- Ritesh Malik

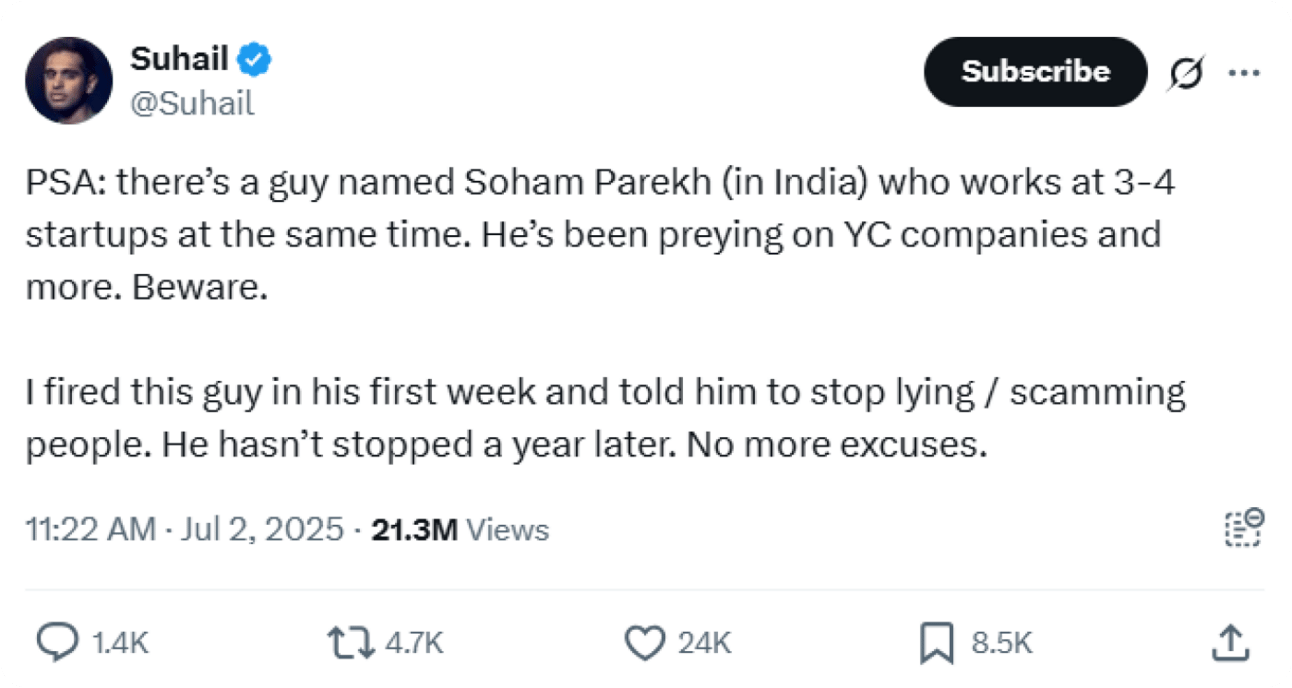

Who is Soham Parekh?

It didn’t feel wrong until I saw the data.

The moment I realized I was a walking bias machine.

2018. I was reviewing our hiring analytics after 18 months of what I thought was "merit-based" recruiting at Innov8.

We'd interviewed 400+ candidates. Built what looked like a dream team. I was proud of our "objective" process.

Then I saw the data on who we'd actually hired:

89% came from the same 6 types of college

76% came from just three cities

82% had nearly identical backgrounds

91% had "clicked" with us immediately

Meanwhile, our top performers?

They broke every pattern.

Our best salesperson was from a small town in Rajasthan, from a college I'd never heard of.

Our most innovative developer was a mechanical engineer who switched careers.

Our star customer success manager was a commerce graduate who'd taught school.

I wasn't hiring talent.

I was hiring comfort.

Here's what's actually happening in your brain when you meet a candidate:

100 milliseconds: Your brain makes a snap judgment. Before you can even think "Hello," it's already decided if this person is "like me" or "different from me."

500 milliseconds: Conscious thought kicks in. But it's too late. Your first impression is locked.

Rest of the interview: You're not evaluating. You're confirming what you already decided in that first tenth of a second.

Princeton's Dr. Alexander Todorov showed how fast this happens. In his experiments, people who saw a face for just 100 milliseconds judged traits like trustworthiness, competence, and likability almost exactly as people given unlimited time did. More time didn't change their judgments; it just made them more confident in their bias.

Even more unsettling: Harvard researchers found that your brain's threat-detection system activates within 50 milliseconds when you see a face of a different race. That happens before you're even aware you're looking at someone.

Your hiring decisions aren't rational. They're neurological.

The Data Doesn't Lie

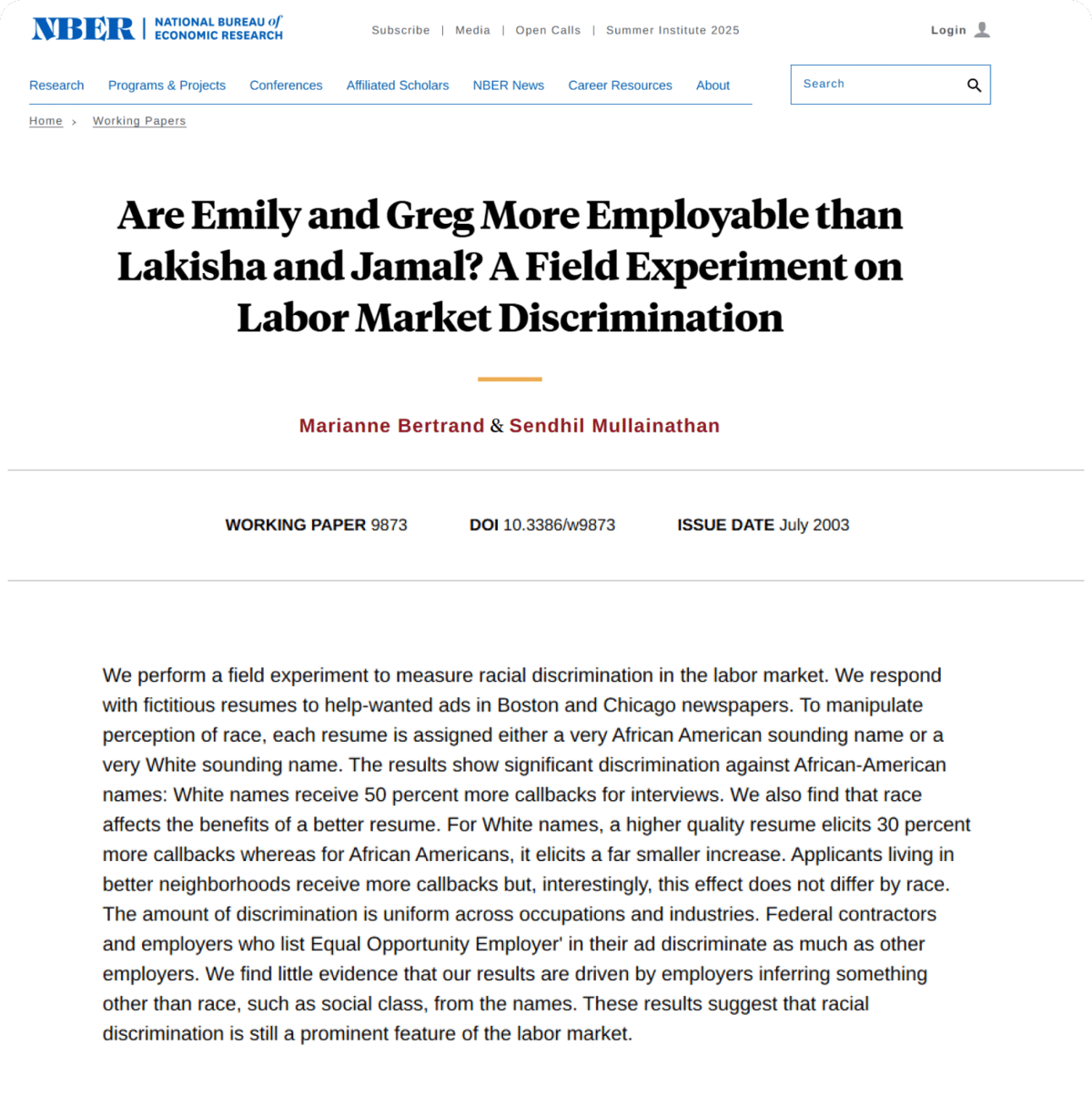

The famous Bertrand and Mullainathan study sent fictitious resumes to real employers, randomly assigning names that sounded white or Black. Names like "Emily" got 50% more callbacks than names like "Lakisha." Same qualifications, same experience, different names.

That was 2004. Has anything changed?

Recent studies across 23 countries show the bias persists globally. In Canada, Asian names get 28% fewer callbacks. In Australia, the gap is even wider: English names get 57% more responses than names associated with ethnic minorities.

But here's what shocked me: new research analyzing 44 years of data found something unexpected. Bias against women applying to male-dominated fields has dropped substantially since 2009. Yet discrimination against older workers, people with disabilities, and ethnic minorities remains as severe as ever.

We're solving yesterday's problems while ignoring today's.

Think AI will save us? Think again.

Amazon spent years building an AI recruiting tool. It learned from 10 years of resumes submitted to the company, most of them from men, and taught itself to systematically discriminate against women.

The system penalized resumes containing the word "women's" (as in "women's chess club captain") and downgraded graduates of two all-women's colleges.

They tried to fix it by making the model neutral to those terms, but there was no guarantee it wouldn't find new ways to discriminate. Amazon eventually scrapped the entire project.

The lesson? Technology amplifies human bias at scale.

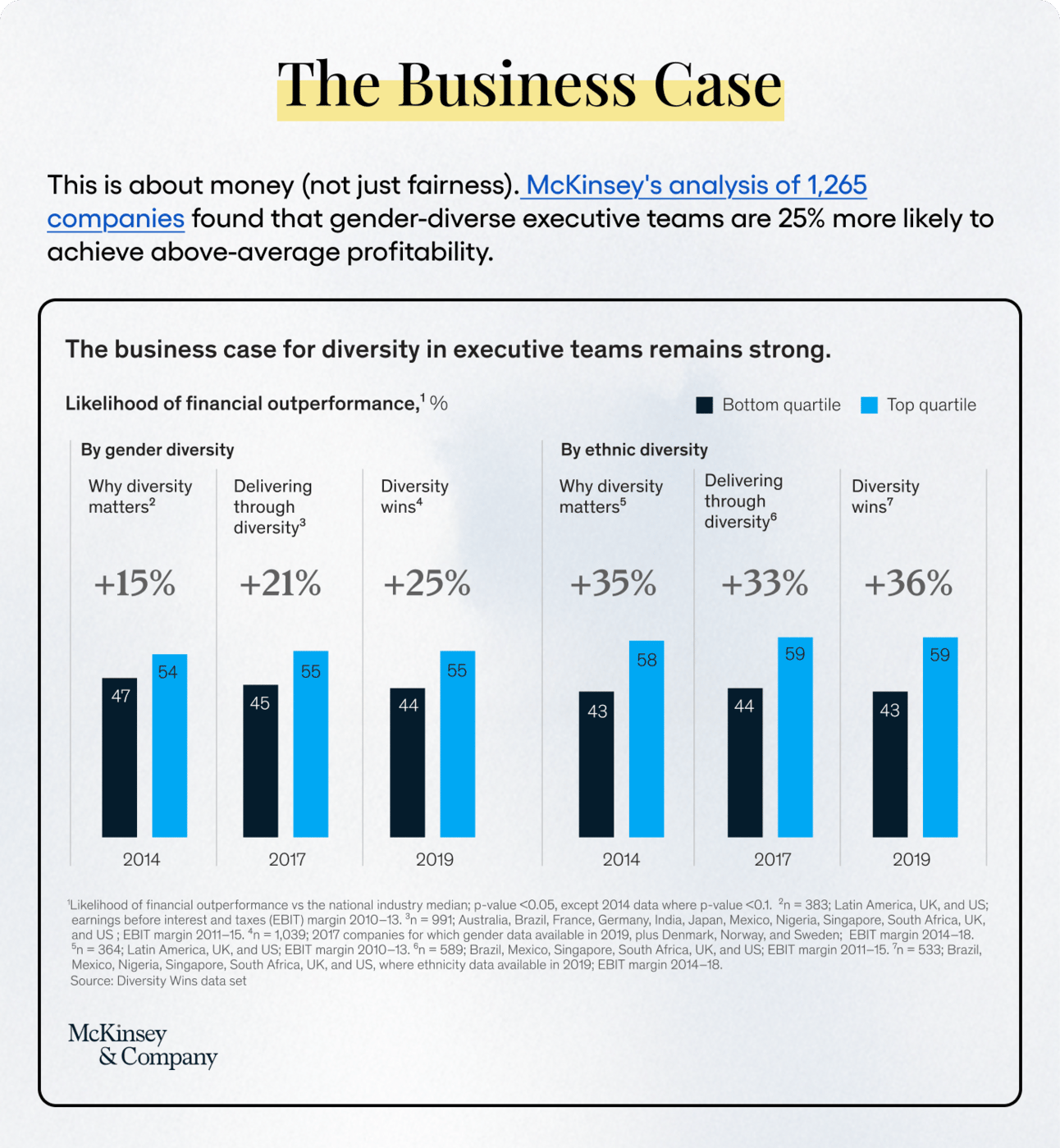

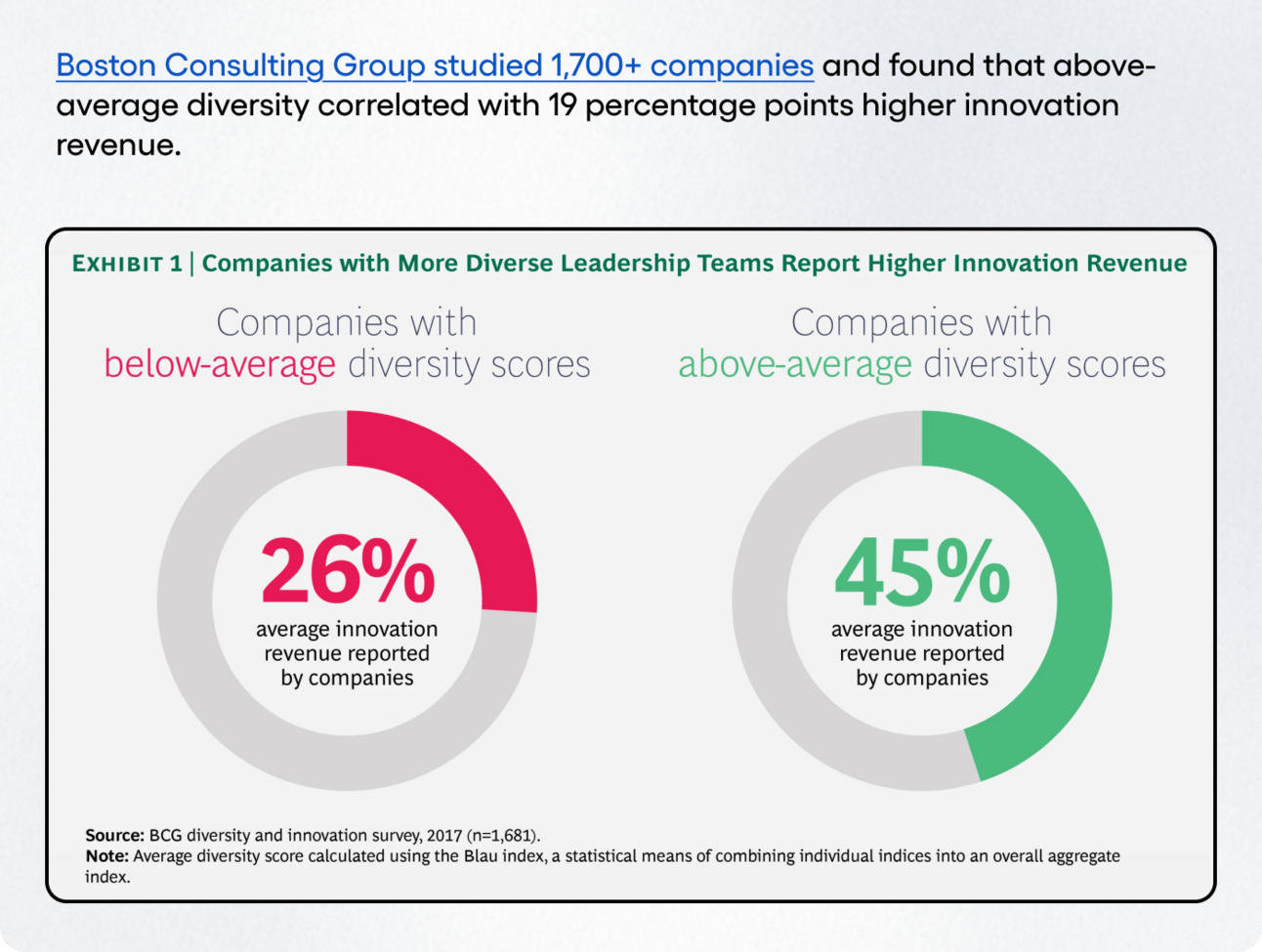

The Companies Getting It Right

Not everyone's failing. Some companies are cracking the code:

Unilever replaced traditional interviews with AI-powered games and blind assessments. Result: 16% increase in diversity, £1M cost savings, 90% faster hiring.

Salesforce committed to pay equity, audited salaries across the company, and spent millions of dollars closing the gaps it found. It reports achieving pay equity globally and has tied executive compensation to diversity metrics.

IBM created systematic diversity task forces in 1995. They tripled female executives and doubled minority executives through structured mentorship and clear accountability.

What's the difference? They treated bias as a systems problem, not a personal failing.

After studying companies that actually reduced bias, here's what works:

Blind initial screening: Remove names, photos, and school info. In GapJumpers' blind auditions, 58% of successful candidates were women and 42% were men, a significant improvement.

Structured interviews: Same questions, same order, same scoring. Research shows this reduces bias by 40% compared to "conversational" interviews.

Work sample tests: Give candidates actual problems to solve. Judge the solution, not the person.

Diverse panels: Mixed hiring committees catch different blind spots and improve decision quality by 35%.

Measurement matters: Track your hiring patterns. If you see clustering, you're hiring bias, not talent.
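A minimal sketch of what "track your hiring patterns" could look like in Python, assuming you can export your hires as simple records. It measures how much of your hiring is concentrated in the most common values of a field such as city or college type, the same kind of number as the 89% and 76% figures from the 2018 review. The field names, the sample data, and the 75% flag threshold are hypothetical choices for illustration, not an established standard.

```python
from collections import Counter


def top_share(values, k=1):
    """Fraction of items whose value is one of the k most common values."""
    if not values:
        return 0.0
    counts = Counter(values)
    top_total = sum(count for _, count in counts.most_common(k))
    return top_total / len(values)


def clustering_report(records, fields, k=3, threshold=0.75):
    """For each field, return (top-k share rounded to 2 places, flagged?).

    `threshold` is a hypothetical cut-off, not an established standard.
    """
    report = {}
    for field in fields:
        share = top_share([record[field] for record in records], k)
        report[field] = (round(share, 2), share >= threshold)
    return report


# Hypothetical hire records; field names and values are illustrative only.
hires = [
    {"city": "Bengaluru", "college_type": "Tier-1"},
    {"city": "Bengaluru", "college_type": "Tier-1"},
    {"city": "Mumbai", "college_type": "Tier-1"},
    {"city": "Jaipur", "college_type": "Tier-3"},
]

print(clustering_report(hires, ["city", "college_type"], k=1))
```

A high share isn't proof of bias on its own, but it tells you where to look first: if three-quarters of your hires share one college type, ask whether the job really requires that.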

Start Monday

You can't think your way out of bias. Your brain moves too fast. But you can build systems that work despite your brain.

Start with one role. Remove identifying information from resumes. Use structured interviews. Test actual skills. Measure your patterns.
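For the first step, removing identifying information, here is a minimal Python sketch assuming candidate data arrives as structured records. It drops the fields the blind-screening advice names (names, photos, school) and gives each candidate an anonymous ID so reviewers can still discuss them. The field names (`name`, `photo_url`, `college`) and the ID format are hypothetical; a real applicant-tracking export will look different.

```python
# Hypothetical field names; a real applicant-tracking export will differ.
IDENTIFYING_FIELDS = {"name", "photo_url", "college"}


def blind(candidate):
    """Return a new record without identifying fields (the input is not modified)."""
    return {key: value for key, value in candidate.items() if key not in IDENTIFYING_FIELDS}


def blind_batch(candidates):
    """Blind every record and attach an anonymous, order-based ID (C001, C002, ...)."""
    blinded = []
    for index, candidate in enumerate(candidates, start=1):
        record = blind(candidate)
        record["candidate_id"] = f"C{index:03d}"
        blinded.append(record)
    return blinded


# Hypothetical applicants; names and scores are illustrative only.
applicants = [
    {"name": "A. Sharma", "photo_url": "a.jpg", "college": "College X",
     "skills": ["SQL", "Excel"], "work_sample_score": 8},
    {"name": "B. Rao", "photo_url": "b.jpg", "college": "College Y",
     "skills": ["Python"], "work_sample_score": 9},
]

for record in blind_batch(applicants):
    print(record)
```

This only strips structured fields. Names and schools buried in free-text resumes or cover letters still need separate redaction. Keep the original list so you can map IDs back to people after shortlisting.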

The best hire you never made is probably someone you dismissed in the first 100 milliseconds based on something completely irrelevant to job performance.

While we're all talking about 100-millisecond hiring bias, one guy just proved it works both ways.

Soham Parekh allegedly fooled 5+ Y Combinator startups into hiring him simultaneously because his Georgia Tech degree and polished resume triggered everyone's "this guy's legit" reflex.

Multiple smart founders called him "really bright and likable": classic confirmation bias, just running in the candidate's favor.

Now X (formerly Twitter) is joking that getting a cold email from him means your startup is successful enough to be worth scamming.

Moral of the story? Even Silicon Valley's smartest fall for that split-second first impression. Sometimes the bias works too well.

Hit reply and tell me about your hiring blind spots. I read every email.

Until next week,

Ritesh